Understanding the BigQuery Pricing Changes

Google’s upcoming billing adjustments aim to align BigQuery pricing with the underlying Cloud Storage costs. While this change promotes transparency, it’s crucial to understand its implications for your BigQuery usage. Let’s break down the two key areas affected:

- Storage Retrieval Fees: If your BigQuery queries access data stored in Nearline, Coldline, or Archive storage classes within Cloud Storage, you’ll now be faced with retrieval fees. These fees vary based on the storage class and the amount of data retrieved.

- Inter-Region Network Data Transfer Fees: When your BigQuery dataset and the Cloud Storage bucket it accesses reside in different regions, you’ll be charged for the data transferred between them. These fees can add up, especially for large datasets or frequent queries.

Assessing Your Potential Costs

Unfortunately, there’s no single, straightforward way to pinpoint the exact costs you’ll face due to the billing changes. However, we can employ a combination of strategies to gain valuable insights:

1. Identify Cloud Storage Buckets Accessed by BigQuery

Analyse BigQuery’s INFORMATION_SCHEMA views to reveal which Cloud Storage buckets your queries interact with. While this doesn’t tell us the bucket locations or storage classes, it’s a crucial first step.

Use this query to list accessed buckets:

SELECT SPLIT(query, 'gs://')[1]

FROM erik-trial.`region-europe-north1`.INFORMATION_SCHEMA.JOBS_BY_PROJECT

WHERE query like '%gs://%' and error_result.reason is nullRemember to run this query for each region where you have BigQuery datasets.

2. Identify Bucket Locations and Storage Classes

To determine if you have buckets in different locations or using Nearline, Coldline, or Archive storage, leverage the gsutil command-line tool in Cloud Shell:

gsutil ls | xargs -n 1 gsutil ls -L -b | grep -B 1 ‘Storage\|Location constraint’

This command lists your buckets, their locations, and storage classes.

3. Combine the Information

To identify areas where new charges will apply, cross-reference the buckets accessed by BigQuery with their locations and storage classes.

In our sandvox environment we ran step 1 with the query:

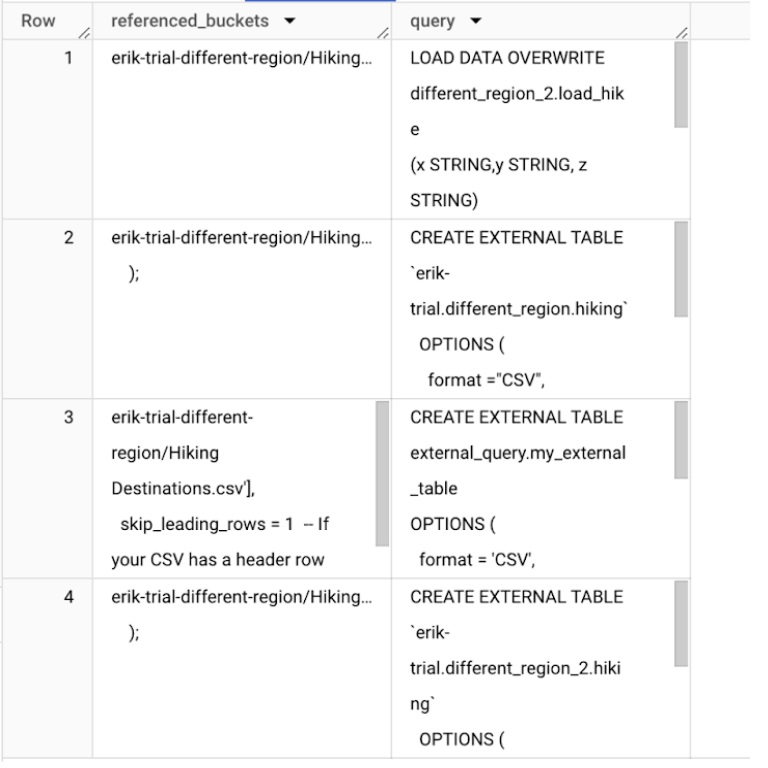

SELECT SPLIT(query, 'gs://')[1] as referenced_buckets, query

FROM erik-trial.`region-europe-north1`.INFORMATION_SCHEMA.JOBS_BY_PROJECT

WHERE query like '%gs://%' and error_result.reason is nullWhich returned the following:

As you can see, the column referenced_buckets starts with the name of a bucket referenced by a past query. Looking at the query column, we see this includes Load and External jobs.

The only bucket referenced by this project and region is erik-trial-different-region, let’s remember this bucket for the next step.

Keep in mind that we only ran this for the region region-europe-north1. To include more BigQuery regions this has to be rerun for each region.

To get more information about the buckets in our project, we ran step 2 in the Cloud Shell which returned the following:

As you can see, our bucket from step 1 is here. It has the Storage class Standard and is in the location “EU”.

Based on the billing updates, from November users will be charged for the following SKU:

If the charge is significant, we should consider moving our bucket to europe-north1 or our BigQuery dataset to europe-west4. Going forward europe-west4 will be treated equal to EU when it comes to the new charges and won’t therefore be affected by inter region transfer costs.

Taking Action

Armed with this knowledge, you can take proactive steps to prepare for the BigQuery pricing changes and manage your Cloud Storage costs. Here are my recommendations:

- Optimise Data Placement: Consider relocating frequently accessed data to the same region as your BigQuery datasets to avoid inter-region transfer fees. If you are importing data from remote Cloud Storage locations, store the data in a Cloud Storage bucket that is in the same region as the destination BigQuery dataset. For example:

- If you are reading data into BigQuery US multi-region, consider placing your Cloud Storage bucket in us-central1.

- If you are reading data into BigQuery us-west1, consider placing your Cloud Storage bucket in us-west1.

Additionally, if you want to co-locate an existing Cloud Storage bucket to your BigQuery region, you can use Storage Transfer Service.

- Review Storage Classes: Evaluate if savings from storing data in Nearline, Coldline, or Archive classes outweigh retrieval fees. Consider enabling Autoclass on the bucket.

- Monitor Usage: Keep an eye on your BigQuery usage patterns and adjust your data storage strategies as needed.

Read more about the affected SKUs and BigQuery pricing on the Google Cloud page.

Need help navigating BigQuery billing changes? Contact our team of Google Cloud experts today!