During KubeCon 2019 in Barcelona I was lucky enough to get hands-on experience with Google Cloud’s latest and greatest: Anthos. The room was packed with attendees from 8 AM, and guidance was provided by a dozen knowledgeable Googlers who have worked closely on or with Anthos. Still in whitelist alpha at the time of writing, Anthos gives you the freedom to manage your hybrid fleet of Kubernetes clusters.

This blog post will not go into all the sales topics and marketing campaigns running around Anthos. It will instead focus on the technical aspects of Anthos and what it can do for you. Let’s go full steam ahead!

What it’s not

It might be good to start by scoping what Anthos is not. It’s not a way to bring Cloud Services to your on-prem environment. Don’t expect to run BigQuery, Container Registry or App Engine on your own hardware. The one exception is GKE on-prem. This neat service allows you to leverage your on-prem hardware to run Kubernetes, while benefiting from the advantages of a managed service like Google Kubernetes Engine.

So what is it?

Anthos is to Kubernetes what Kubernetes is to containers. And more. When you create a Deployment in Kubernetes, K8S makes sure your Pods always match the desired state. A Pod crashes? Kubernetes will schedule a new one to get from the actual state back to the desired state. Kubernetes does that in a declarative way: as an end user, you typically let Kubernetes know what you’d like by using YAML files.
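
To make the desired-state idea a bit more tangible, here is a minimal Python sketch of a reconcile loop. It is a toy model, not how Kubernetes is implemented: the `Cluster` class, the `reconcile` function and the `web-N` pod names are all made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for a cluster (hypothetical); it only tracks pod names."""
    pods: list = field(default_factory=list)
    _counter: int = 0

    def start_pod(self) -> None:
        # Every new pod gets a fresh name, like a replacement pod would.
        self._counter += 1
        self.pods.append(f"web-{self._counter}")

    def stop_pod(self) -> None:
        self.pods.pop()


def reconcile(cluster: Cluster, desired_replicas: int) -> None:
    """Move the actual state toward the desired state, one pod at a time."""
    while len(cluster.pods) < desired_replicas:
        cluster.start_pod()
    while len(cluster.pods) > desired_replicas:
        cluster.stop_pod()


cluster = Cluster()
reconcile(cluster, desired_replicas=3)  # initial rollout
cluster.pods.remove("web-2")            # simulate a crashed pod
reconcile(cluster, desired_replicas=3)  # the loop replaces it
print(cluster.pods)
```

You only ever state the number of replicas you want; the loop works out which actions are needed to get there.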

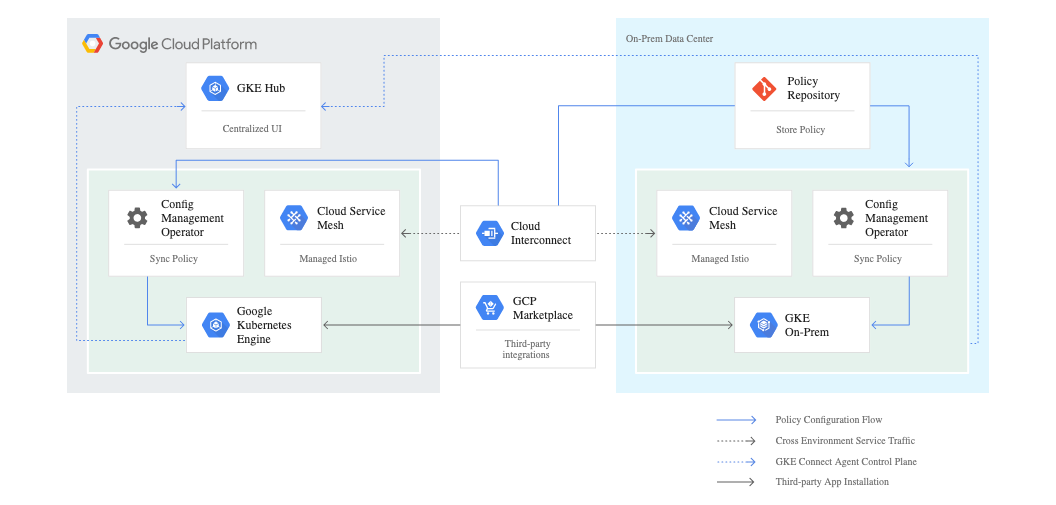

Just the same, Anthos is a platform that will manage your Kubernetes clusters for you. It does 3 things, and it does them well:

- Infrastructure provisioning in both cloud and on-premises.

- Infrastructure management tooling, security, policies and compliance solutions.

- Streamlined application development, service discovery and telemetry, service management, and workload migration from on-premises to cloud.

You tell Anthos what you want your Kubernetes clusters to look like, and it will make sure your demands are met. The great thing here is that those different clusters are not limited to GKE clusters. With the Anthos GKE Connect Agent installed on your Kubernetes cluster, that cluster can reside anywhere, as long as it can connect to Anthos.

So how does it work?

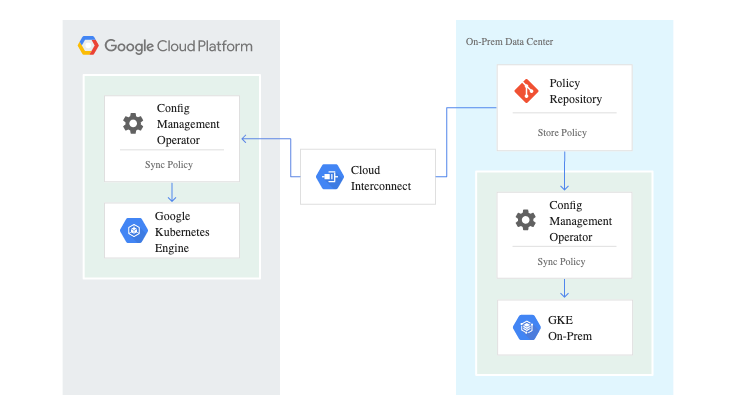

Great question! I was just getting to that. You start with a Git repo (either GitHub or GSR for now) where you manage your YAML files, the desired state of your environment. This GitOps way of working is gaining more and more traction, so it’s great to see this as the default flow in Anthos.

With Cluster Selectors, you have the option to label specific clusters and have specific policies apply only to those clusters (e.g. use 1 GKE and 1 on-prem cluster for DEV/TEST, and another GKE cluster for Prod), or have the policies apply to all clusters. The great thing here is that these concepts build on what you already know and love in K8S, like a Service targeting Pods using label selectors. This makes Anthos feel like a natural extension, rather than a completely new concept to learn.
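
The selection logic can be sketched in a few lines of Python. This is only the equality-based label matching you know from Kubernetes selectors, not Anthos code; the cluster names, labels and the `matches`/`select` helpers are assumptions for the sake of the example.

```python
def matches(selector: dict, labels: dict) -> bool:
    """True when every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical fleet: two clusters for DEV/TEST and one for Prod.
clusters = {
    "gke-dev": {"environment": "dev", "location": "cloud"},
    "onprem-dev": {"environment": "dev", "location": "on-prem"},
    "gke-prod": {"environment": "prod", "location": "cloud"},
}


def select(selector: dict) -> list:
    """Return the names of all clusters a policy with this selector applies to."""
    return sorted(name for name, labels in clusters.items() if matches(selector, labels))


print(select({"environment": "dev"}))  # policy for DEV/TEST clusters only
print(select({}))                      # no selector: applies to every cluster
```

An empty selector matches everything, which mirrors the "apply to all clusters" case.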

We’ve already mentioned the Anthos Agent that is installed on each Kubernetes cluster. This Agent is responsible for communicating with GKE Hub, the managed service on Google Cloud. It queries the Hub for the desired state and makes changes where needed.

Because the Agent runs inside each cluster, Google Cloud does not need credentials to your clusters: no ‘keys to the kingdom’. Instead, the Agent queries GKE Hub in Google Cloud to request the desired state and acts accordingly. The fact that GKE Hub has no access to your clusters is a great security benefit, limiting the blast radius if anything goes wrong.
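
Here is a small Python sketch of that pull model. The `Hub` and `Agent` classes and their methods are hypothetical and say nothing about the real protocol; the point is only that the hub stores desired state and never holds a reference to, or credentials for, a cluster.

```python
class Hub:
    """Hypothetical managed service: it only stores desired state per cluster."""

    def __init__(self):
        self._desired = {}

    def publish(self, cluster_name: str, config: dict) -> None:
        self._desired[cluster_name] = dict(config)

    def desired_state(self, cluster_name: str) -> dict:
        return dict(self._desired.get(cluster_name, {}))


class Agent:
    """Hypothetical in-cluster agent: it initiates every connection itself."""

    def __init__(self, cluster_name: str, hub: Hub):
        self.cluster_name = cluster_name
        self.hub = hub
        self.applied = {}

    def sync(self) -> list:
        """Pull the desired state, apply it locally, return the keys that changed."""
        desired = self.hub.desired_state(self.cluster_name)
        all_keys = desired.keys() | self.applied.keys()
        changed = sorted(k for k in all_keys if desired.get(k) != self.applied.get(k))
        self.applied = desired
        return changed


hub = Hub()
agent = Agent("onprem-dev", hub)
hub.publish("onprem-dev", {"namespace/shop": "v1"})
print(agent.sync())  # first pull applies the new config
print(agent.sync())  # nothing changed, so nothing to do
```

All calls go from `Agent` to `Hub`; there is no method on `Hub` that reaches into a cluster.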

Thanks to the Service Mesh provided by Istio, all workloads in all clusters have the potential to talk to each other, if allowed. Within a single cluster, the regular cluster DNS resolves names in the .local domain. To reach workloads running in another cluster, you leverage ServiceEntries. A separate DNS is managed on each cluster for traffic that leaves the cluster, which is addressed with a .global domain. To resolve these .global DNS queries, you leverage Stub Domains.
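
As an illustration, the Python sketch below builds such a ServiceEntry as a plain dict and prints it as JSON. The field names follow Istio's `networking.istio.io/v1alpha3` ServiceEntry API as I understand it. Everything else is an assumption: the helper name, the `httpbin`/`bar` service, both IP addresses and the gateway port `15443` are placeholders, and the workshop did not show what Anthos generates under the hood.

```python
import json


def remote_service_entry(service, namespace, port, virtual_ip,
                         remote_gateway, gateway_port=15443):
    """Build a ServiceEntry manifest (as a dict) for a service in another cluster.

    gateway_port=15443 is an assumed default, not something from the workshop.
    """
    return {
        "apiVersion": "networking.istio.io/v1alpha3",
        "kind": "ServiceEntry",
        "metadata": {"name": f"{service}-{namespace}"},
        "spec": {
            # The .global name that local workloads will call.
            "hosts": [f"{service}.{namespace}.global"],
            "location": "MESH_INTERNAL",
            "ports": [{"name": "http1", "number": port, "protocol": "http"}],
            "resolution": "DNS",
            # Placeholder address associated with the .global name.
            "addresses": [virtual_ip],
            # Traffic is forwarded to the remote cluster's gateway.
            "endpoints": [{"address": remote_gateway,
                           "ports": {"http1": gateway_port}}],
        },
    }


entry = remote_service_entry(
    service="httpbin", namespace="bar", port=8000,
    virtual_ip="127.255.0.2",       # placeholder value
    remote_gateway="203.0.113.10",  # documentation-range IP, hypothetical
)
print(json.dumps(entry, indent=2))
```

Local workloads would then call `httpbin.bar.global`, while a name ending in `.local` keeps resolving inside the cluster.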

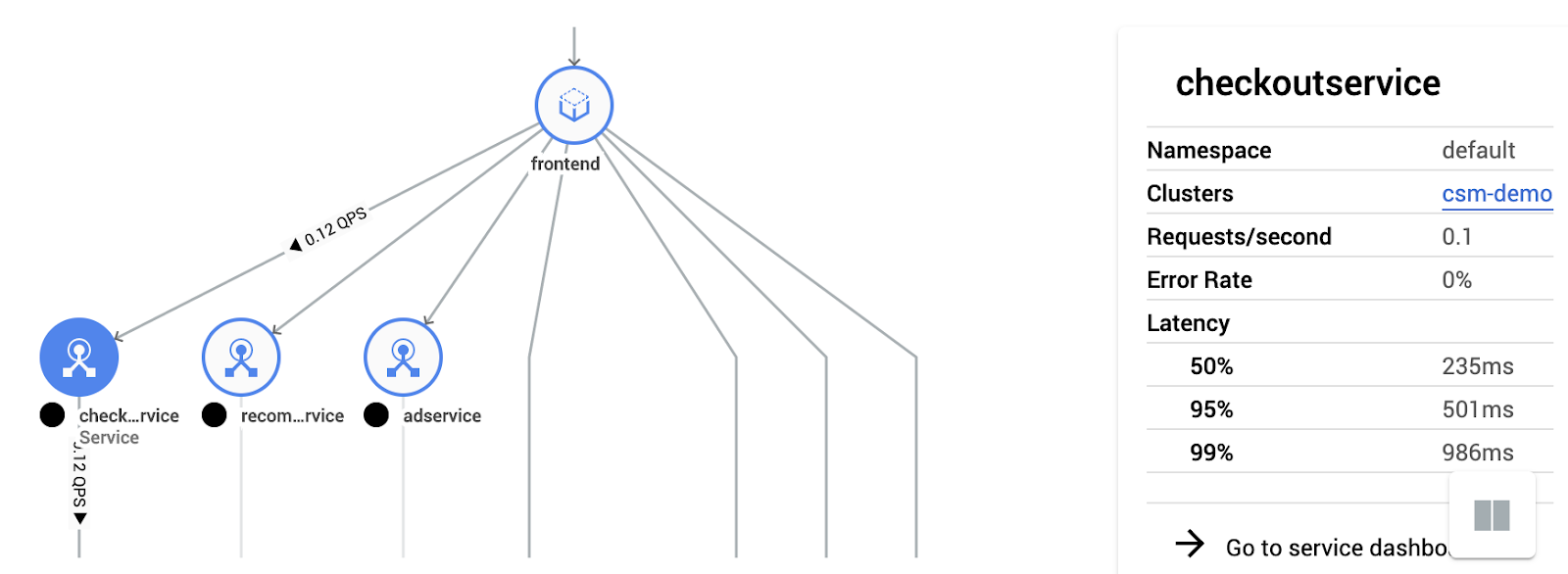

Can I get a UI please?

Having several clusters controlled by Anthos frees you from all the operational hassle around them. But it might also mean you get mentally disconnected from what’s running in there. Luckily, we have Anthos Cloud Service Mesh (CSM). With CSM, you focus on the workloads running within your clusters. Based on Istio and Stackdriver, CSM provides unique insights into your workloads. One great feature of CSM is the Topology view that helps you visualize the workloads running in your cluster and how they’re connected.

Next, CSM also has the capability to provide you with security improvement suggestions (for example, detecting unsecured connections).

Finally, Google is well known for their industry-leading role in SRE. There are 2 great books out there which help you understand the concepts and how to bring them into practice. One big gap was that, while all of this is great, there was no product or solution that put it all into practice. But now CSM makes this a simple, straightforward task. It provides you with out-of-the-box SLIs like errors and latency and allows you to create SLOs based on them. With a few clicks, you can get an SLO up and running in no time.

To make it more concrete, think of latency as the SLI on which the following SLO would be based: “95% of all 5min windows in 1 rolling day meet 95% latency”, with the latency threshold set to, for example, 600ms. In line with the SRE guidelines from Google, CSM will help you keep track of these SLOs and maintain your error budget, a core SRE principle.
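
The arithmetic behind such an SLO fits in a short Python sketch. It rests on my own reading of the wording: a 5-minute window is "good" when its 95th percentile latency is at or below the 600ms threshold, and the SLO is met when at least 95% of the day's windows are good. The function names and the sample latencies are made up; this is not how CSM computes it internally.

```python
import math

WINDOWS_PER_DAY = 24 * 60 // 5  # 288 five-minute windows in a rolling day


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct is an integer, 1-100)."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def window_is_good(latencies_ms, threshold_ms=600, pct=95):
    """A window is good when its pct-th percentile latency meets the threshold."""
    return percentile(latencies_ms, pct) <= threshold_ms


def slo_report(windows, target=0.95):
    """Summarize compliance and the remaining error budget, counted in windows."""
    total = len(windows)
    good = sum(window_is_good(w) for w in windows)
    allowed_bad = math.floor((1 - target) * total)
    return {
        "compliance": good / total,
        "met": good / total >= target,
        "budget_remaining": allowed_bad - (total - good),
    }


fast = [100] * 20             # every request well under 600ms
slow = [100] * 18 + [900] * 2  # 10% of requests at 900ms, so p95 is 900ms
day = [fast] * 278 + [slow] * 10

print(slo_report(day))
```

With 288 windows and a 95% target, the error budget is 14 bad windows per day; the sample day above spends 10 of them.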