Around mid-December 2018, Google released a brief blog post announcing Cloud DNS Forwarding. The shortness of that blog post stands in stark contrast to the huge impact it can have in simplifying enterprises’ cloud adoption. Let me explain why.

What is Google Cloud DNS Forwarding?

In short, Google expanded Cloud DNS with the option to manage a private DNS environment however you want. You can rely on GCP’s DNS server (called Internal DNS for Compute Engine), add any DNS server as an additional one next to Google’s, or completely overrule GCP’s DNS with your own DNS server. And there’s a lot of power in that last one.

So why is this such a big deal?

Before DNS policies let you list your own DNS server, it was a huge hassle to get around GCP’s own DNS server, especially on Linux instances. If you wanted your Linux instances to know about your machines on-prem, you could not simply change their resolv.conf because GCP is kinda stubborn and resets it every 24 hours. You also could not modify the Internal DNS used by the metadata server in any way. Thanks for that…

There were a lot of workarounds, including installing a BIND DNS server on every instance, set to forward to your actual DNS server. Not quite manageable for huge workloads if you ask me. On Windows servers it was easier, as you could just pop up the good old networking config and change the DNS server IPs there. But you still had to do that on every server. Not very manageable if you’re looking at Cloud for the so-called ‘managed services’. Ironically, you ended up with even more to manage!

Google now lets you create an outbound DNS policy that uses an alternative name server as the sole source of truth. From the docs: “When you do this, the alternative name servers become the only source that GCP queries for all DNS requests submitted by VMs in the VPC using their metadata server, 169.254.169.254.”
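
To make this a bit more concrete, here is a minimal sketch of what the body of such a policy could look like when sent to the Cloud DNS API. The field names follow the Policy resource as I understand it, so double-check them against the current API reference. The project, network, policy name and the 10.0.0.53 address are all made-up placeholders.

```python
import json


def outbound_policy_body(name, network_url, name_server_ips):
    """Build a policy body that points a VPC at alternative name servers.

    Assumption: field names mirror the Cloud DNS Policy resource
    (networks[].networkUrl, alternativeNameServerConfig.targetNameServers[]).
    """
    if not name_server_ips:
        raise ValueError("at least one alternative name server is required")
    return {
        "name": name,
        "description": "Use our own DNS servers as the only source for this VPC",
        "networks": [{"networkUrl": network_url}],
        "alternativeNameServerConfig": {
            "targetNameServers": [{"ipv4Address": ip} for ip in name_server_ips]
        },
    }


# Hypothetical project and network names, purely for illustration.
NETWORK_URL = (
    "https://www.googleapis.com/compute/v1/"
    "projects/my-host-project/global/networks/my-vpc"
)

policy = outbound_policy_body("use-onprem-dns", NETWORK_URL, ["10.0.0.53"])
print(json.dumps(policy, indent=2))
```

The sketch only builds the JSON document. Actually creating the policy still requires an authenticated call (console, gcloud or an API client), which is left out here on purpose.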

The word ‘only’ is key here. It finally takes control away from the Compute Engine Internal DNS monster, over which you had zero control.

You no longer need to rely on DNS names like bitcoin_miner-539.c.my-awesome-project.internal without being able to add your own DNS entries.

So how should I use it?

As always: it depends. You now have the flexibility to go either way: have Google manage your full DNS, add a secondary server, or take complete control. You can add your existing Active Directory DNS as a secondary DNS by creating a forwarding zone (for example, letting Google manage the Compute Engine instances while relying on your Active Directory for your on-prem environment), or give full control to your Active Directory by enabling an outbound forwarding policy, which will totally bypass the Compute Engine Internal DNS.
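
As an illustration of the ‘secondary DNS’ option, here is a rough sketch of a forwarding zone body. Again, the field names are my reading of the Cloud DNS ManagedZone resource and should be verified against the API reference. The zone name, the corp.example domain, the network URL and the 10.0.0.53 address are hypothetical.

```python
import json


def forwarding_zone_body(name, dns_name, network_url, target_ips):
    """Build a private forwarding zone body for one on-prem domain.

    Assumption: field names mirror the Cloud DNS ManagedZone resource
    (visibility, privateVisibilityConfig, forwardingConfig).
    """
    if not dns_name.endswith("."):
        dns_name += "."  # zone names are fully qualified, with a trailing dot
    return {
        "name": name,
        "dnsName": dns_name,
        "description": "Forward this domain to our Active Directory DNS",
        "visibility": "private",
        "privateVisibilityConfig": {"networks": [{"networkUrl": network_url}]},
        "forwardingConfig": {
            "targetNameServers": [{"ipv4Address": ip} for ip in target_ips]
        },
    }


# Hypothetical project, network and domain, purely for illustration.
ZONE_NETWORK_URL = (
    "https://www.googleapis.com/compute/v1/"
    "projects/my-host-project/global/networks/my-vpc"
)

zone = forwarding_zone_body("onprem-ad", "corp.example", ZONE_NETWORK_URL, ["10.0.0.53"])
print(json.dumps(zone, indent=2))
```

With a zone like this, only names under the forwarded domain go to Active Directory, while everything else keeps resolving the way it did before.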

Important: A DNS policy that enables outbound forwarding disables resolution of Compute Engine internal DNS and Cloud DNS managed private zones.

This is a super clean approach, as the metadata server, which every Compute Engine instance is automatically configured to use for DNS resolution, will respect your DNS preferences. There is no more need to change anything on a Compute Engine instance; you can centrally manage your DNS environment with Cloud DNS.
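
To summarise the behaviour described so far, here is a toy model of which resolver ends up answering a query. It is a simplification based only on this post (an outbound policy wins over everything, otherwise a matching forwarding zone, otherwise the internal DNS) and not a description of Google’s actual implementation. The function name, domains and IPs are invented for the example.

```python
def pick_resolver(query_name, alternative_name_servers, forwarding_zones):
    """Return (kind, servers) for a query, following the rules in this post.

    alternative_name_servers: IPs from an outbound policy (may be empty).
    forwarding_zones: mapping of zone DNS name -> list of target IPs.
    """
    name = query_name.rstrip(".").lower()

    # An outbound policy makes the alternative servers the only source.
    if alternative_name_servers:
        return ("alternative", list(alternative_name_servers))

    # Otherwise, use the most specific forwarding zone that matches.
    best = None
    for zone_name, targets in forwarding_zones.items():
        zone = zone_name.rstrip(".").lower()
        if name == zone or name.endswith("." + zone):
            if best is None or len(zone) > len(best[0]):
                best = (zone, targets)
    if best is not None:
        return ("forwarding-zone", list(best[1]))

    # Fall back to the internal DNS behind the metadata server.
    return ("internal", ["169.254.169.254"])


zones = {"corp.example.": ["10.0.0.53"]}
print(pick_resolver("dc01.corp.example.", [], zones))
print(pick_resolver("vm-1.c.my-project.internal", [], zones))
print(pick_resolver("vm-1.c.my-project.internal", ["10.0.0.53"], zones))
```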

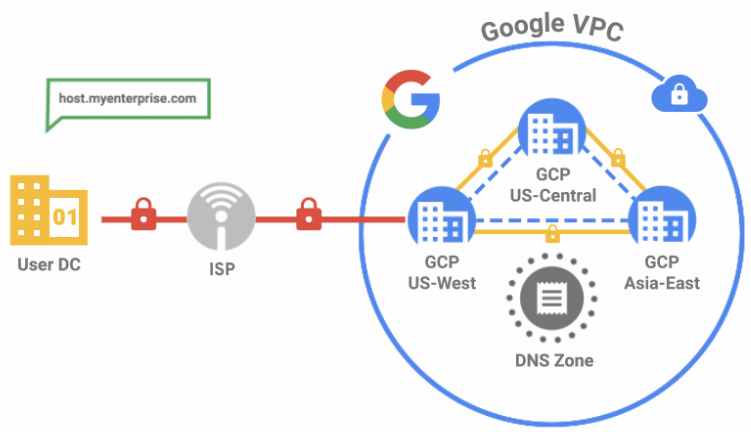

Full enterprise-ready: Shared VPC

Add to that the use of Shared VPC, and you can manage your entire VPC (incl. VPN, DNS and firewalling) in the host project. This gives the service projects peace of mind, as they don’t even have to care about DNS and will automatically enjoy the freedom of the setup in the host project. This way, the networking team can take care of the host project, while hiding all this complexity from operational colleagues who only need to manage the VMs, not the network, in the service project.

They just create an instance in the service project using the Shared VPC and BOOM, it is automatically configured to access the DNS over the VPN connection managed in the host project. Wanna go loco? Configure all of this using Deployment Manager for a full Infrastructure-as-Code approach.

Another neat feature is that it also works the other way around: Inbound DNS forwarding allows your on-prem machines to query Google Cloud Internal DNS. So you really have full flexibility now to go fully Hybrid.
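
For completeness, a sketch of the inbound variant. As far as I know this is a single flag on the same kind of policy, but treat the field name as an assumption and verify it in the API reference. The policy and network names are placeholders.

```python
import json


def inbound_policy_body(name, network_url):
    """Build a policy body that lets on-prem resolvers query the VPC's DNS.

    Assumption: the flag is called enableInboundForwarding on the
    Cloud DNS Policy resource.
    """
    return {
        "name": name,
        "description": "Allow on-prem DNS servers to query this VPC",
        "enableInboundForwarding": True,
        "networks": [{"networkUrl": network_url}],
    }


# Hypothetical project and network names, purely for illustration.
INBOUND_NETWORK_URL = (
    "https://www.googleapis.com/compute/v1/"
    "projects/my-host-project/global/networks/my-vpc"
)

inbound_policy = inbound_policy_body("allow-onprem-queries", INBOUND_NETWORK_URL)
print(json.dumps(inbound_policy, indent=2))
```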

Closing remark: obviously, either a VPN or Interconnect is needed to connect to your on-premises Active Directory, to ensure you stay within your private network and keep everything closed to public access. We wouldn’t want the world to query our internal DNS for ‘master.finance.fourcast’, now would we?

Need some help? Feel free to reach out, at Devoteam G Cloud we have the expertise to make your project a success.