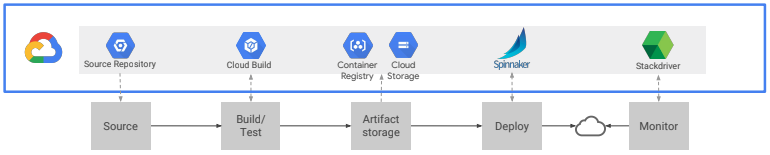

We recently finished a project for a client in which we revamped its entire DevOps setup using “Google Deployment Manager”, “Google Cloud Build” and “Spinnaker”. While the project was centered on Kubernetes Engine (which is the way to go if you want to leverage the full benefits of the cloud for your application), the same framework could also be used for deploying monolithic applications on VMs, Cloud Functions, and apps on App Engine.

There is a multitude of DevOps tools out there; however, we are convinced that the combination of GCP and open-source tools described in this article is one of the most efficient and user-friendly.

The Start: Git Architecture

Let’s start with a high-level overview of the architecture used. An architecture drawing is worth a thousand words here.

It all starts with the codebase, and having a thorough git strategy is one of the prerequisites. GCP now has its own version control service, Cloud Source Repositories, which can automatically mirror your code from Bitbucket and GitHub. In our case, the client had its code on Bitbucket and we mirrored it to Cloud Source Repositories. Having your codebase on GCP makes integration with Cloud Build easier, as well as debugging on Kubernetes. On top of this, it has a decent free tier.

As mentioned, you need to have a git strategy in place before implementing a CI/CD pipeline. There is a ton of literature out there; for this project we opted for the “successful Git branching model” together with the git-flow extension. Then there is the debate on how your team should collaborate (rebase, fast-forward merge, squash, etc.). This really depends on the size of your team and how they are used to working. Our approach has been:

- One feature — One branch

- Feature finished → squash all the commits

- Pull request

- Admin merges the feature into the develop branch

With your codebase on Cloud Source Repositories and your git strategy well defined, you can now build your CI/CD pipeline so that your developers can focus only on coding and can test and deploy their applications in a matter of minutes. The tools used also make life easier for your DevOps team: their work moves from a mix of manual steps, debugging and hot fixes to monitoring the different tools and ensuring they run smoothly.

This framework frees your developers from tasks that add no value and gives your DevOps team more interesting and strategic work.

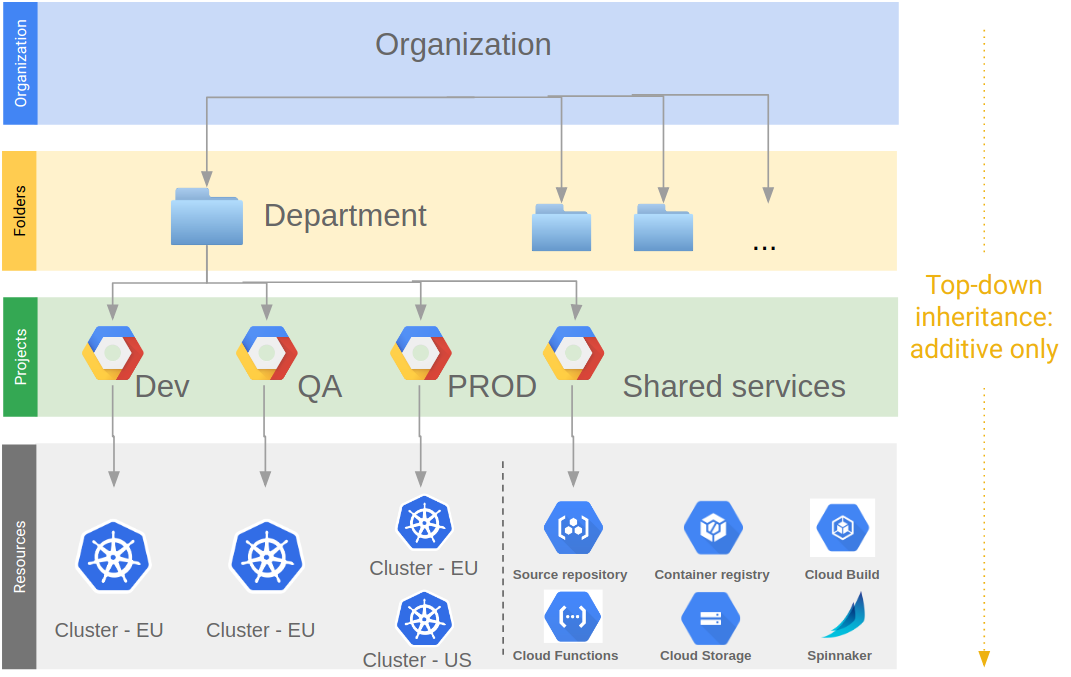

Project Architecture

Before deep diving into the CI and CD tools, let’s talk about the project architecture as this has been something of great added value for the customer. We have opted for a separation of the different environments into different projects, with one additional project for all the shared services.

This shared services project is dedicated to DevOps and hosts the container images, the Cloud Build triggers, the K8s manifests, the cluster running Spinnaker, etc. Separating the environments into projects, with one project dedicated to DevOps, allows very granular access policies and makes the purpose of each project clear. Nothing is mixed; the idea behind it is “one project, one purpose”.

Continuous Integration with Cloud Build

Let’s now focus on the Continuous Integration part with Cloud Build. Its only objective is to do one of the following:

- bake a container image and push it to Google Container Registry

- copy new K8s manifest files to Google Cloud Storage

Each of these actions is triggered by a push to the codebase that changes, respectively, the application or the K8s manifest files (Cloud Build listens for these changes through separate triggers). A Cloud Build run is a list of steps, each of which defines one action to perform.

The main steps are fetching the codebase, running unit tests and building the image. In principle, any action can be performed through Cloud Build, since each step runs as a container of its own. Once Cloud Build has finished all its steps, the new image on GCR or the new K8s files on GCS trigger a notification to Pub/Sub, to which Spinnaker is subscribed.
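As a rough illustration of the step list described above, the sketch below builds a Cloud Build configuration as a Python dictionary and prints it as JSON, a format Cloud Build accepts alongside YAML. The image name `my-app`, the `python:3.11` test image and the unit-test command are assumptions made for the example, not details from the client project.

```python
import json


def build_config(image_name: str) -> dict:
    """Return a Cloud Build config with a unit-test step and an image build step."""
    # $PROJECT_ID and $SHORT_SHA are substitutions that Cloud Build fills in
    # for triggered builds; they are left as literal text here.
    image = f"gcr.io/$PROJECT_ID/{image_name}:$SHORT_SHA"
    return {
        "steps": [
            {
                # Assumed test runner: any container image can act as a step.
                "name": "python:3.11",
                "entrypoint": "python",
                "args": ["-m", "unittest", "discover"],
            },
            {
                # Build the application image with the Docker builder.
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", image, "."],
            },
        ],
        # Images listed here are pushed to the registry when all steps succeed.
        "images": [image],
    }


if __name__ == "__main__":
    print(json.dumps(build_config("my-app"), indent=2))
```

The printed JSON could be saved as the build config file that a trigger points to. There is no explicit fetch step because Cloud Build makes the source available to the steps itself.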

Pub/Sub, GCP’s message-oriented middleware, is the communication pillar between the CI and the CD parts.
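To give an idea of what travels over Pub/Sub when a new image lands on GCR, here is a minimal sketch that decodes such a notification. The field names (`action`, `digest`, `tag`) follow our understanding of the Container Registry notification format and should be treated as an assumption. Spinnaker does its own parsing, so the function `image_to_deploy` is purely illustrative.

```python
import base64
import json
from typing import Optional


def image_to_deploy(message_data: str) -> Optional[str]:
    """Return the pushed image tag from a base64-encoded notification, or None."""
    payload = json.loads(base64.b64decode(message_data).decode("utf-8"))
    # Only react to newly pushed images, not to deletions.
    if payload.get("action") != "INSERT":
        return None
    return payload.get("tag")


if __name__ == "__main__":
    # Hypothetical sample message; project and image names are made up.
    sample = {
        "action": "INSERT",
        "digest": "gcr.io/my-project/my-app@sha256:abc123",
        "tag": "gcr.io/my-project/my-app:1.0",
    }
    encoded = base64.b64encode(json.dumps(sample).encode("utf-8")).decode("ascii")
    print(image_to_deploy(encoded))
```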

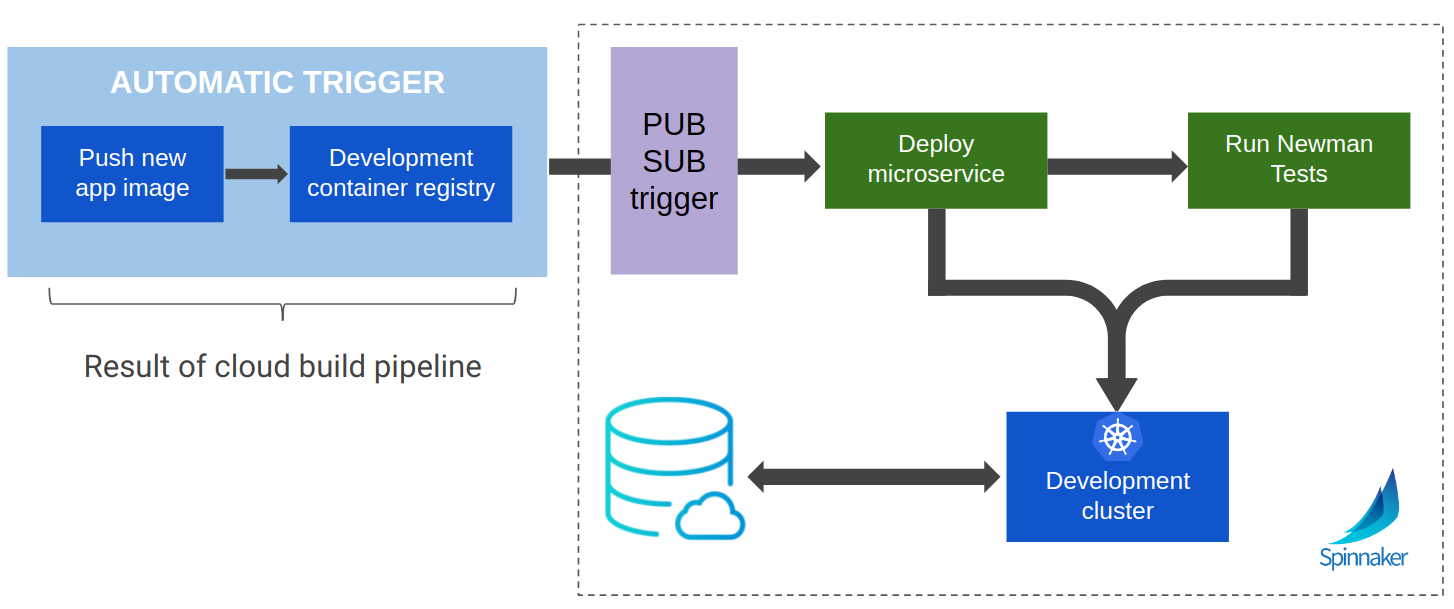

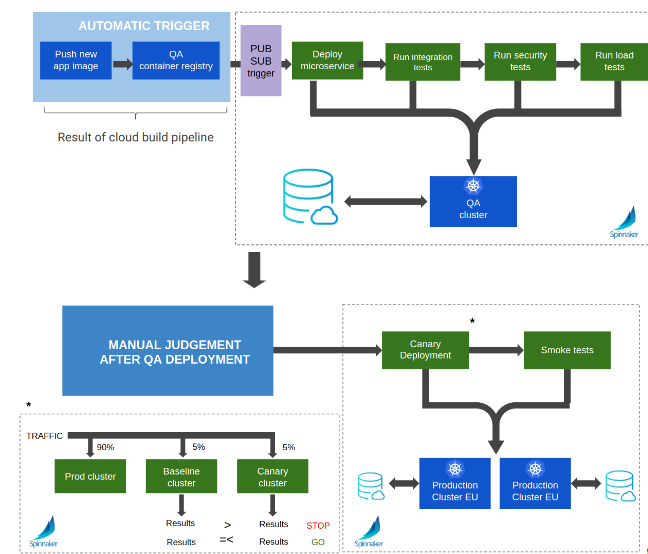

Deployment

Let’s now move on to the deployment part. This is done through the open-source software Spinnaker, which is deployed on a cluster in the shared services project. Once deployed, you can easily configure the UI to be accessible on a public domain with OAuth 2.0 access (see tutorial). Spinnaker’s user-friendly UI makes it very simple to create pipelines and deploy to your different GCP projects once some initial configuration is done. The pipelines are organized as follows:

- Dev pipeline that deploys your image on the cluster of the dev project. It also runs some integration tests; if any test fails, the deployment stops and reverts to the previous image.

- Prod pipeline that starts by running a multitude of tests on the image deployed on the cluster of the QA project. The team can then test the app manually on the QA project and, once satisfied, approve it manually in Spinnaker, which triggers a canary deployment on the cluster of the prod project. A canary deployment is an advanced kind of deployment that keeps sending 90% of the traffic to your previous version, sends 5% to a fresh baseline cluster running the previous version, and sends the remaining 5% to a cluster running the new version (the canary cluster). Creating a brand new baseline cluster ensures that the metrics being compared are free of any effects caused by long-running processes. The canary analysis then compares a large set of metrics between the baseline cluster and the canary cluster through the Kayenta library. If the results are satisfactory, 100% of the traffic is sent to the new version. Otherwise, all traffic goes back to the previous version.

- Feature pipeline: a major complaint from developers working with K8s is how hard it is to test a feature quickly. One solution is to create a CI/CD pipeline for code pushed to a feature branch. Cloud Build bakes an image in a specific GCR subfolder, and this Spinnaker pipeline then deploys the image on the cluster of the dev project, in a namespace named after the feature branch. Developers can then quickly test their changes on the dev environment without impacting the regular app, which is deployed in the default namespace.
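The 90/5/5 traffic split used by the canary stage of the prod pipeline can be sketched in a few lines. This is a toy simulation and not how Spinnaker or Kayenta are implemented: the `promote` rule, its single error-rate metric and the `tolerance` value are hypothetical stand-ins for the much richer metric comparison done in the real canary analysis.

```python
import random
from collections import Counter

# Traffic weights from the prod pipeline description:
# 90% existing version, 5% fresh baseline, 5% canary.
WEIGHTS = {"previous": 0.90, "baseline": 0.05, "canary": 0.05}


def route(rng: random.Random) -> str:
    """Pick the target cluster for one request according to WEIGHTS."""
    return rng.choices(list(WEIGHTS), weights=list(WEIGHTS.values()), k=1)[0]


def promote(baseline_error_rate: float, canary_error_rate: float,
            tolerance: float = 0.01) -> bool:
    """Hypothetical rule: promote if the canary is not worse than the
    baseline by more than `tolerance`."""
    return canary_error_rate <= baseline_error_rate + tolerance


if __name__ == "__main__":
    rng = random.Random(42)
    # Roughly 90% / 5% / 5% of simulated requests per cluster.
    print(Counter(route(rng) for _ in range(10_000)))
    print("promote" if promote(0.02, 0.021) else "roll back")
```

Comparing the canary against a fresh baseline rather than against the long-running previous version is the point of the design: both clusters start from the same clean state, so differences in metrics are more likely to come from the new code.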

Wait, where is IaC in all this and why would I need it?

Infrastructure as code (IaC), here implemented with Google Deployment Manager, is the practice of making the configuration of your infrastructure reproducible, scalable, and easy to review by describing it using code. Infrastructure as code comes from the realization that infrastructure is also “software”, which is particularly true for the public cloud.

Basically, it means translating any action you would perform in the GCP console (create a cluster, set an IAM policy, create a bucket, etc.) into code.

When your infrastructure needs to change, you just change it in your code and then apply the update via a gcloud command.
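Deployment Manager templates can be written in Python, so a minimal sketch of "a bucket as code" could look like the following. The property names `suffix` and `location`, and the project name in the local dry run, are assumptions chosen for the example. In a real deployment the `context` object is supplied by Deployment Manager.

```python
def generate_config(context):
    """Template entry point: return the resources to create."""
    # Bucket names are global, so prefix with the project ID.
    bucket_name = "{}-{}".format(
        context.env["project"], context.properties["suffix"]
    )
    return {
        "resources": [
            {
                "name": bucket_name,
                "type": "storage.v1.bucket",
                "properties": {"location": context.properties["location"]},
            }
        ]
    }


if __name__ == "__main__":
    # Local dry run with a fake context; not part of the template itself.
    from types import SimpleNamespace

    fake_context = SimpleNamespace(
        env={"project": "shared-services"},
        properties={"suffix": "k8s-manifests", "location": "EU"},
    )
    print(generate_config(fake_context))
```

After changing a template like this one, the update mentioned above is typically applied with `gcloud deployment-manager deployments update`, pointing at the configuration file that imports the template.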

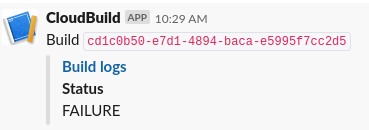

Notifications

Various tools have been described in this document, and things can get messy if we don’t have one notification tool that ties them all together.

This is where Slack comes to the rescue, thanks to static and dynamic integrations with all the tools mentioned in this document. From the git integration, which informs you about pull requests that you can accept directly from Slack, to Spinnaker’s manual judgment stage, you can build almost any kind of notification using Slack apps and Google Cloud Functions.
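As one possible shape for such a notification, the sketch below is a Pub/Sub-triggered Cloud Function that forwards Cloud Build status messages to a Slack incoming webhook. The webhook URL is a placeholder, the function names are ours, and the fields read from the build message (`id`, `status`, `logUrl`) are assumed from the Cloud Build resource format. Treat it as a starting point rather than the integration used in the project.

```python
import base64
import json
import urllib.request

# Hypothetical placeholder: replace with your own Slack incoming-webhook URL.
SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/REPLACE/ME"


def slack_payload(build: dict) -> dict:
    """Turn a Cloud Build status message into a Slack message body."""
    text = "Build {}: {}".format(build.get("id", "?"), build.get("status", "UNKNOWN"))
    if build.get("logUrl"):
        text += " ({})".format(build["logUrl"])
    return {"text": text}


def notify_slack(event, context):
    """Entry point for a Pub/Sub-triggered background Cloud Function."""
    # Pub/Sub delivers the message body base64-encoded in event["data"].
    build = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    body = json.dumps(slack_payload(build)).encode("utf-8")
    request = urllib.request.Request(
        SLACK_WEBHOOK_URL,
        data=body,
        headers={"Content-Type": "application/json"},
    )
    urllib.request.urlopen(request)
```

Keeping the message formatting in `slack_payload` separate from the HTTP call makes it easy to test the wording locally without a real webhook.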

Want assistance developing this kind of advanced CI/CD pipeline for your K8s application? Our experienced developers will guide you through all the different steps.

The consulting can range from a simple architecture design with tips to a fully customised implementation.
