Written by Jason Quek, Global CTO at Devoteam G Cloud

In recent days, alarm bells have been ringing, with calls for the public to build up reserves in case services are disrupted. I am deliberately avoiding the “w” word, as I do not want to seem alarmist, and most people cannot believe it could happen in Sweden in this day and age. One concept that was drilled into me during my military service in Singapore is total defence. As a software engineer, the least I can do is help harden the cybersecurity posture of the applications and services that have become the backbone of many customers’ operations. I have written the advice below with virtual and physical machine workloads in mind, because the overwhelming majority of customers still run on virtual or physical machines.

Here are a few must-dos that can, and must, be acted on today:

1) As a first step, update all operating systems to their latest versions, whether they are Windows, Linux or Mac-based.

Roll out an OS patch management service within the next 10 days to keep patching up to date, and prioritize critical patches by putting an SLA on the team, e.g. every vulnerability with a CVSS score of 9 or above must be fixed within 2 hours during business hours. Make sure your IT team takes an inventory of its operating systems and subscribes to a CVE alert feed: https://www.cvedetails.com/
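
To make such an SLA concrete, here is a minimal sketch of a triage queue that turns CVSS scores into patch deadlines. Only the "9.0 and above within 2 hours" tier comes from the text; the lower tiers, the `Finding` structure and the placeholder CVE IDs are hypothetical, and business hours are not modelled (deadlines are plain wall-clock time).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Tuple

# SLA tiers keyed by minimum CVSS base score, checked from most to least severe.
# Only the 9.0+ / 2-hour tier comes from the text; the others are placeholders.
SLA_TIERS: List[Tuple[float, timedelta]] = [
    (9.0, timedelta(hours=2)),
    (7.0, timedelta(days=2)),
    (4.0, timedelta(days=14)),
    (0.0, timedelta(days=30)),
]


@dataclass
class Finding:
    cve_id: str
    host: str
    cvss: float
    detected_at: datetime


def patch_deadline(finding: Finding) -> datetime:
    """Return the wall-clock patch deadline (business hours are not modelled)."""
    for min_score, window in SLA_TIERS:
        if finding.cvss >= min_score:
            return finding.detected_at + window
    raise ValueError(f"invalid CVSS score: {finding.cvss}")


def triage(findings: List[Finding]) -> List[Tuple[Finding, datetime]]:
    """Pair each finding with its deadline, most urgent first."""
    return sorted(((f, patch_deadline(f)) for f in findings), key=lambda pair: pair[1])


if __name__ == "__main__":
    detected = datetime(2024, 1, 8, 9, 0)
    queue = triage([
        Finding("CVE-0000-0001", "web-01", 5.3, detected),  # placeholder IDs
        Finding("CVE-0000-0002", "db-01", 9.8, detected),
    ])
    for finding, due in queue:
        print(finding.cve_id, finding.host, "due", due)
```

Feeding this from your CVE subscription or scanner output (not shown) gives the team an ordered work list where the critical items are always at the top.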

If you are on Google Cloud, enable Security Command Center; the CVE details appear once it has scanned your inventory. Use VM Manager to automate patching for your virtual machines: https://cloud.google.com/compute/docs/vm-manager

2) Update all applications to the latest versions.

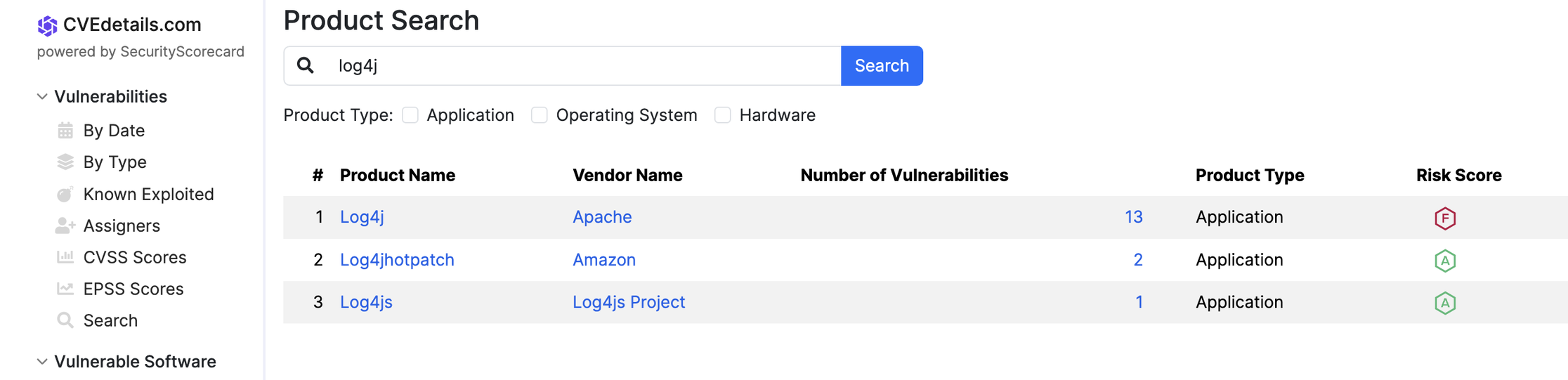

Use the same method as above to track CVEs, but this time identify the software used in your services, such as Apache, Log4j, self-hosted Jira, Magento, Jenkins and WordPress, to name a few. Pay special attention to add-on plugins: they are built by third parties and often introduce vulnerabilities or leave security holes open. I have seen many customers attacked through this vector who were surprised to find that the incident was not covered by support, because the attack came through a plugin purchased on the software’s marketplace.
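
Once you have an inventory of applications and plugins, comparing it against the minimum patched versions from advisories can be automated. The sketch below assumes simple dotted numeric versions (it does not handle suffixes such as `-beta`); the component names and version thresholds are hypothetical. Versions are compared numerically because plain string comparison would wrongly rank "1.2.0" above "1.10.0".

```python
from typing import Dict, List, Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '2.17.1' into (2, 17, 1)."""
    return tuple(int(part) for part in version.split("."))


def audit(
    installed: Dict[str, str], minimum_safe: Dict[str, str]
) -> Tuple[Dict[str, Tuple[str, str]], List[str]]:
    """Compare installed versions with the minimum patched versions.

    Returns (outdated, untracked): outdated maps name -> (installed, required);
    untracked lists components with no advisory data, which need a manual
    review (forgotten third-party plugins often end up here).
    """
    outdated: Dict[str, Tuple[str, str]] = {}
    untracked: List[str] = []
    for name, version in sorted(installed.items()):
        required = minimum_safe.get(name)
        if required is None:
            untracked.append(name)
        elif parse_version(version) < parse_version(required):
            outdated[name] = (version, required)
    return outdated, untracked


if __name__ == "__main__":
    # Hypothetical inventory and thresholds; take real values from your
    # CVE feed and the vendors' security advisories.
    installed = {
        "example-cms": "6.4.2",
        "example-shop-plugin": "1.2.0",
        "example-form-plugin": "3.1",
    }
    minimum_safe = {"example-cms": "6.4.2", "example-shop-plugin": "1.10.0"}
    outdated, untracked = audit(installed, minimum_safe)
    print("outdated:", outdated)
    print("no advisory data:", untracked)
```

For real package ecosystems, a dedicated version parser (for example `packaging.version.Version` for Python packages) is safer than this simplified one.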

If you can use a managed service, it helps a lot, thanks to the support included and the automated updates the provider should roll out in the event of zero-day exploits.

3) Implement Multi-Factor Authentication and rotate keys and passwords regularly

This is one of the simplest, if time-consuming, measures that greatly improve security. Google Authenticator, YubiKey and passkeys each add an extra step that external actors must overcome to gain access to your systems. Cybersecurity is about creating defence in depth: multiple layers that attackers have to get through before they give up. Interestingly, this was a military strategy long before computers were invented. https://en.wikipedia.org/wiki/Defence_in_depth https://en.wikipedia.org/wiki/Defense_in_depth_(computing)
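
To show what that extra step involves, here is a minimal sketch of the time-based one-time password scheme (TOTP, RFC 6238) used by authenticator apps such as Google Authenticator. It only covers the authenticator-app style of MFA; YubiKeys and passkeys typically use FIDO2/WebAuthn, which works differently. This is for illustration; in production, rely on your identity provider or a vetted library rather than hand-rolled code.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def key_from_base32(secret: str) -> bytes:
    """Decode a base32 shared secret, the format authenticator apps use."""
    cleaned = secret.replace(" ", "").upper()
    return base64.b32decode(cleaned + "=" * (-len(cleaned) % 8))


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify(key: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept the current code or one from +/- `window` steps (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue
        expected = totp(key, t, step, len(submitted))
        if hmac.compare_digest(expected, submitted):
            return True
    return False


if __name__ == "__main__":
    # Test secret from RFC 6238 ("12345678901234567890" in base32).
    key = key_from_base32("GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ")
    print("current code:", totp(key))
```

An attacker who has stolen a password still needs the shared secret (or the device holding it) to produce a valid code, which is exactly the extra layer described above.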

Service account keys, SSH keys, VPN passwords and regular passwords all need to be rotated regularly. If you haven’t rotated a credential since you set it up (perhaps years ago), bite the bullet and rotate it once as a start. Then set up a schedule to rotate every 1-3 months. I have seen attacks that exploit stale credentials, both on-prem and in the cloud, more times than I care to count. Better still, avoid service account keys altogether, although not all applications support Workload Identity Federation: https://cloud.google.com/iam/docs/workload-identity-federation. It is one of the greatest inventions for security, improving it tenfold, but it requires additional configuration to work. Make the attacker target Google instead of you (Google can handle it).
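
A rotation schedule is easier to enforce when something flags overdue credentials automatically. The sketch below assumes you can export a list of credentials with their last rotation time (how you collect it, e.g. from your cloud IAM or secrets manager, is not shown); the `Credential` structure and the example names are hypothetical, and the 90-day limit is the upper end of the 1-3 month schedule above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional

# Upper bound of the 1-3 month rotation schedule.
MAX_AGE = timedelta(days=90)


@dataclass
class Credential:
    name: str               # hypothetical identifier
    kind: str               # e.g. "service-account-key", "ssh-key", "vpn-password"
    last_rotated: datetime  # timezone-aware UTC timestamp


def overdue(creds: Iterable[Credential], now: Optional[datetime] = None,
            max_age: timedelta = MAX_AGE) -> List[Credential]:
    """Return credentials older than max_age, stalest first."""
    now = now or datetime.now(timezone.utc)
    stale = [c for c in creds if now - c.last_rotated > max_age]
    return sorted(stale, key=lambda c: c.last_rotated)


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    inventory = [
        Credential("ci-deployer", "service-account-key",
                   datetime(2021, 3, 1, tzinfo=timezone.utc)),
        Credential("bastion", "ssh-key", datetime(2024, 5, 20, tzinfo=timezone.utc)),
        Credential("office-vpn", "vpn-password",
                   datetime(2023, 11, 1, tzinfo=timezone.utc)),
    ]
    for c in overdue(inventory, now):
        age = (now - c.last_rotated).days
        print(f"{c.kind} {c.name}: last rotated {age} days ago")
```

Sorting stalest first puts the "set up years ago and never touched" credentials at the top, which are the ones to rotate first.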

4) Use a vulnerability scanner

You can’t fix what you don’t know about. There are open-source scanners (https://owasp.org/www-community/Vulnerability_Scanning_Tools), paid ones, and cloud-based ones such as Security Command Center (https://cloud.google.com/security/products/security-command-center?hl=en). Start with at least one; once it is in place, work through the remediations it recommends, and then evaluate what suits you best in the long term.

5) Take backups and build Golden Images

If your environment has not been compromised (or is newly built), take backups that are immutable and offsite. Some recommend offline backups as well, which may be necessary for the highest levels of security, but I find that immutable backups on an isolated cloud service, e.g. Google Cloud Storage with object or bucket locks, are good enough in most cases. Test that your restore process actually works so that you can get back to a functioning state, and rehearse it every 6 months to keep your engineers’ skills fresh. Note that this step should come after your service account keys are protected and rotated, since an attacker holding those keys can target and destroy your backups too.
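
One way to make restore tests meaningful is to record a checksum manifest at backup time and compare the restored files against it during each rehearsal. This is a minimal sketch under that assumption; the function names are hypothetical, the manifest should be stored somewhere an attacker cannot modify (for example with the immutable backup itself), and the restore step (e.g. downloading from a locked bucket) is not shown.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> Dict[str, str]:
    """Map every file under root (relative POSIX path) to its SHA-256 digest."""
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def verify_restore(manifest: Dict[str, str], restored_root: Path) -> List[str]:
    """Compare a restored tree with the manifest taken at backup time.

    Returns a list of problems; an empty list means every file came back intact.
    """
    actual = build_manifest(restored_root)
    problems = []
    for rel, expected in manifest.items():
        if rel not in actual:
            problems.append(f"missing: {rel}")
        elif actual[rel] != expected:
            problems.append(f"corrupted: {rel}")
    for rel in sorted(actual.keys() - manifest.keys()):
        problems.append(f"unexpected: {rel}")
    return problems
```

A rehearsal then consists of restoring into a scratch location, running `verify_restore` against the stored manifest, and treating any non-empty result as a failed drill.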

If your environment has been compromised, assume the worst and take the opportunity to build Golden Images of your virtual machines. This means setting up a new VM with all necessary dependencies and patches, and making an immutable image of it. Every new rollout is then based on that Golden Image, and your team should produce new versions of it over time. This allows you to destroy and recreate infected virtual machines with confidence.

Summary

Many of the security measures above can be time-consuming, and while they are put off, external actors can exploit the security holes born of that neglect. If your team is overstretched delivering new features, it is important to pay someone else to do this work, but still verify that it is being done to ensure accountability.

By no means does this guarantee a foolproof system, but it will give you many more layers of defence than you may have today.

For a deeper dive into cloud security tips, check out my Cloud Next session, “Check your Security Posture”.