Now that the basics of GCP and MLOps have been covered, this section will go over how to combine them to set up an end to end MLOps system on Google Cloud. This section will follow the workflow starting from the ML Engineer’s local machine up until the model is up and running in production.

4.1 Data Exploration and Experimentation

Initially, data on GCP will be stored either on Cloud Storage or Big Query for analysis. ML engineers will start doing data exploration to identify useful features for the model they want to build. This can be done either on a local machine (in which case one should be careful about data exfiltration risks) or on a Workbench instance, in which case the engineer is already working inside of the Google environment.

Once features have been identified, ML engineers will probably start prototyping different types of models to find out which approaches work and which do not. Vertex AI Workbench allows them to leverage powerful virtual machines for local training, or they can submit Vertex AI Training jobs instead. Using AutoML and/or pre-built APIs is also a standard practice to quickly identify the baseline performance for a given use case.

After selecting which models to further test, it is time to start working on a training pipeline, rather than local experimentation.

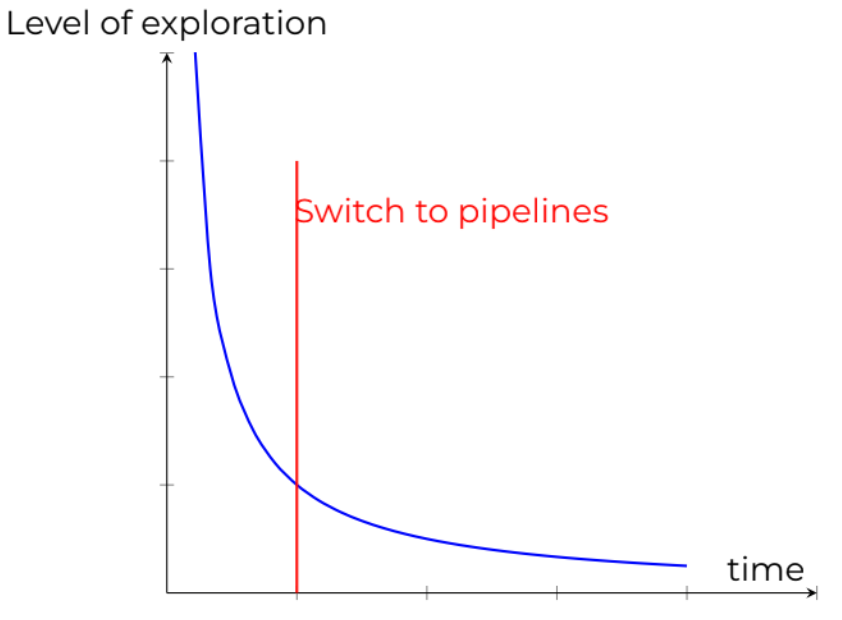

Figure 5: graph showing when to switch to Vertex AI Pipelines

The development of an ML pipeline usually starts after most of the exploratory analysis has been carried out and the general shape of the model is defined, as shown in figure 5.1. In general there is a tradeoff between development velocity and solution robustness and quality. When to make this switch will depend on the type of problem and individual preference.

4.2 Vertex AI Feature Store

Vertex AI Feature Store is a great tool allowing you to store, serve and monitor ML features at scale. It especially fits the overall MLOps picture as it allows ML engineers to track the evolution of features over time. Feature Store can create alerts for when your ML features drift from their original distribution. This is especially important in ML features, as data arrive continuously. At any time, the features may change

from what they used to be at training time, eventually leading to model deterioration. Feature drift is thus a critical event to detect, so that models can be retrained and ensure business continuity. Also, feature drift may be a sign that the architecture of the ML models need a change. For this reason, feature drift must not only trigger a retraining of the model, but also alert relevant technical and business profiles within an organization.

4.3 Pipelines on Vertex AI

Once model selection has been narrowed down to a couple of possible architectures and data pre-processing is in a more or less stable state, it is time to properly structure the code.

The code structure depends heavily on what type of pipeline tool is used. Most often, code is split up into components that perform one specific function. In case code needs to be used across multiple components, this code can be placed into a private Python package that is then installed in the necessary components. As explained, components are executed as Docker containers. This means that each component runs in its own environment, which can be customized at build time with the packages that are needed for the specific task.

An important step when designing a component is making sure that its inputs and outputs are well defined. Not only does this ensure that the resulting artifacts are stored properly in Vertex AI Metadata, but it is also required in order to pass objects from one component to another and make them overall reusable. The dependencies between components define the execution graph of the pipeline, therefore the order in which tasks are executed. It is important to verify that the components are not circularly dependent, otherwise it will not be possible to compile them into a pipeline.

Once the pipeline is defined, it can be written as a pipeline template and stored in Artifact Registry. Just like components, pipelines can be created in a reusable fashion. For example, one may create a general pipeline template to tackle all binary classification use cases, performing all steps of the machine learning workflow, including hyperparameter tuning to ensure that the best possible model ends up being created.

4.4 Model Training

In most cases, a pipeline will contain at least one training component. In order to benefit from the resources available in Vertex AI training, the training component should instantiate a Vertex AI training job, either by running an AutoML training job or by launching a Vertex AI custom training job in a pre-built or custom container.

4.4.1 AutoML Training

AutoML models are suitable for many use cases concerning tabular, image, video or text data. AutoML training jobs do not require any custom training script, nor is it necessary to perform hyperparameter tuning or cross validation. However, only little customization is available over the training resources. Often, you can only control the total budget for a given training job. The trained model will automatically

be uploaded to the Model Registry and will be available for deployment. Optionally, AutoML models can be exported to be used on an edge device or in an on-premise environment.

The main benefit of AutoML is that it enables less ML knowledgeable people to train their own models without too much effort. It can also solve as a useful tool to create strong baseline models.

4.4.2 Custom Training

Training a model with a custom application enables the ML engineer to have full control over the process. The training job is run in a container, either pre-built or custom. In order to run training in a pre-built container, it is required to provide a script containing the training code that will be executed during the job. When working with a custom container, the training application should be included in the container and the Vertex AI job should only specify the command to run when launching the container. Both custom training methods allow the user to fully customize the training environment as the resources (potentially, distributed) to be allocated for the task can be specified in the Vertex AI job definition. The ML engineer should ensure that cross validation is performed, if needed. This can be done as part of the application or as part of the pipeline execution. After training, it may be necessary to upload the model to the Model Registry in order to deploy it to an endpoint.

4.4.3 Custom Hyperparameter Tuning Training

A particular type of custom training job is the hyperparameter tuning job. Although it is possible to implement a custom tuning application as a component, the most effective procedure to choose the best hyperparameters for a model is to use the tool provided by Vertex AI, namely, Vizier AI. It is a black-box hyperparameter tuning framework that is able to achieve greater performance over diverse use cases, using notably less compute resources.

A hyperparameter tuning job can be instantiated starting from any other custom training job, with the addition of details over the parameters to be optimized, the metrics and the search algorithm. The tuning job then launches the training application multiple times in parallel, with different sets of parameters. After completion, the results of the optimization job will be available in the Vertex AI console, with extensive information over each training run allowing ML engineers to discover how their models behave in different settings.

4.5 Model Hosting

The last step in an ML pipeline is to deploy a trained model to an end point, so that it can be queried for online predictions. AutoML models handle prediction requests without the need for a serving application. On the other hand, custom models provide predictions through a serving container. Pre-built solutions are available but it is also possible to build a custom application to use for serving. Multiple deployment approaches are available on GCP, three solutions are compared below.

4.5.1 Vertex AI Endpoints

Vertex AI Endpoints is Vertex AI’s serverless model hosting service. Any model uploaded on Model Registry can be deployed to an endpoint. Custom serving containers are supported. In this case any prediction request sent to the endpoint is handled by a custom application. A given model can be deployed on multiple endpoints at a time, which can for instance be beneficial when a model should provide predictions through several endpoints with different specifications. Moreover, multiple models can be deployed to a given endpoint. In this case it is required to specify how the traffic is split among all models. This is particularly useful for A/B tests and canary releases of new model versions.

A Vertex AI endpoint can scale horizontally, thus adding nodes if more computational power is needed to provide predictions. However, the minimum number of nodes required at any given time is 1, therefore it is not possible for the endpoint to completely scale down to 0 prediction nodes.

Vertex AI Endpoints are designed to integrate well with other Ver tex AI resources, for this reason they provide more in-depth monitoring over model performances than other deployment solutions. Model Monitoring is available for models deployed on Vertex AI Endpoints, therefore providing a service to automatically detect prediction anomalies.

4.5.2 Cloud Run

Cloud Run is a serverless service that allows the user to run containerized applications. A serving application with a custom prediction script can then be hosted on Cloud Run to deploy a trained model, which does not need to be uploaded to Model Registry. This solution also allows autoscaling of nodes to accommodate variable utilization rates, including scaling down to 0 nodes unlike Vertex AI Endpoints. Cloud Run allows some customization over the resources that run the serving application. Cloud Run provides monitoring over container uti

lization, however, model monitoring is not integrated as in Vertex AI Endpoints and should be implemented separately, for instance by the means of custom metrics. Additionally, rolling out new model versions and A/B testing must be handled on the application side as Cloud Run does not contain any logic around ML models.

4.5.3 Google Kubernetes Engine

Google Kubernetes Engine (GKE) is a managed service providing Kubernetes clusters. It is used for deployment of containerized applications, therefore it can host a serving container for model predictions as with Cloud Run. In GKE, applications are run on a set of machines forming a cluster. This environment is fully customizable and can be configured to suit all requirements in terms of computational power, scaling and availability. Both horizontal and vertical auto scaling are available, although the autoscaler will not completely scale down to 0 nodes. Monitoring in GKE is limited to infrastructure and cluster metrics, so model performance should be assessed separately.

4.5.4 When to choose which option?

Vertex AI Endpoints is the default option, as it integrates best with all other Vertex AI services and it provides the best options in terms of features. However, it is not necessarily the most cost effective solution. This is due to the fact that it cannot scale down to zero.

For cases where budget is a concern, Cloud Run can be a better option due to the fact that it allows the model service to scale down to 0 instances.

Google Kubernetes engine can be interesting for teams that are already heavily using the GKE ecosystem. It can provide efficiency gains due to closer management of the underlying compute resources. The main downside is the extra infrastructure management effort.

4.6 Model Monitoring

Once a model is deployed, monitoring should be configured to ensure that the quality of the performance does not drop because of various drifts. Model Monitoring is available for tabular models, both AutoML and custom, that are deployed on Vertex AI Endpoints. Skew and drift detection are managed by Model Monitoring jobs, alerts can be configured to warn the user in the event of an anomaly. The job will monitor the activity of an endpoint and produce graphs that the user can visualize in the console to further analyze the results. Input data from prediction requests is saved and analyzed in BigQuery. In particular

In particular for skew detection it is necessary to also provide training data in Big Query in order to compare training features with incoming data. Moreover, for cases where your model needs to predict offline (batch cases), it is possible to detect drift at the beginning of your model development with a Vertex AI pipeline component using TensorFlow Data Validation in order to calculate the statistical distribution of your features. In the same spirit, we have the possibility to extend our monitoring capability with Explainable AI by introducing feature attribution methods and making sure that your model doesn’t drift in the attribution values.

4.7 Model transparency and fairness

Many industries face strict regulation over the usage of artificial intelligence powered features. Additionally, quickly-evolving technologies and international laws make it hard for non-lawyers to follow-up on the latest updates. For this reason, we advocate all ML engineers to adopt stricter internal practices. Mainly, this effort focuses on three equally important points:

- The use cases tackled

- The features being used

- The models that are developed

For sure, questions may be asked around the types of use cases that your company tackles. One must define proper boundaries of what they consider acceptable. Especially, be aware that some use cases can be used for other purposes than the one they were originally designed for. This of course concerns the sensitivity of the data that is being used, but also the concepts that could easily be transferred from one set of data to another.

Then, ML engineers should pay attention to which features of the data are used to train machine learning models. For instance, banks may not include the gender of a person to assess his/her credit risk score.

Finally, the type of model being used is of importance, as it may determine compliance with a given use case. One pain point in machine learning is to gain and retain user trust, and one way to alleviate this is to develop explainable models. These models are able to, thanks to various techniques, produce results and explain why it predicted it. This can vary from custom strategies, to feature attribution and even image segmentation. Importantly, model validation and evaluation play an important role in model fairness, as a peculiar analysis of the performance of your models may highlight important discrepancies in your dataset.

In general, we strongly encourage ML engineers to ensure full model transparency, at least within their own organization. This often comes with a detailed documentation explaining implementation choices, an argument of the features being used and why it complies with a company’s moral boundaries, as well as a complete dissection of the performance of the models.

As for tools, Vertex AI has Explainable AI that can automatically or manually provide explanations to models’ results. Additionally, Google’s What-If Tool provides a great toolkit for discovering caveats in ML models. Of course, those are only tools and will not replace strong AI ethics within a company.

4.8 A practical example

The previous sections described the process for developing ML solutions, from the design stage until production. To better understand how the steps should be assembled into one pipeline, a sample project is provided on GitHub1. The pipeline defined in this project implements an AutoML model predicting credit card default. The model is trained on the credit_card_default dataset, which is publicly available on BigQuery. The target variable default_payment_next_month can take values 0 or 1, thus making the model a binary classifier.

The pipeline contains four components:

- Creation of a tabular dataset, using BigQuery as a data source

- Training an AutoML model using the tabular dataset as input

- Creation of an endpoint hosted on Vertex AI

- Deployment of the trained model to the endpoint

The project also includes other files, which define the infrastructure of the GCP project. This ensures the reproducibility of the sample MLOps pipeline in any environment.